Sociopathic AI agents

AI alignment will likely require creating AIs with genuine empathy

I took a break from Substacking for a while due to other responsibilities. As they are slowly getting under control, I plan to write somewhat regularly again going forward. I still have two articles to complete in my series on Python as a language for data science, and those will be forthcoming. In the meantime, a short note on sociopathic AI agents.

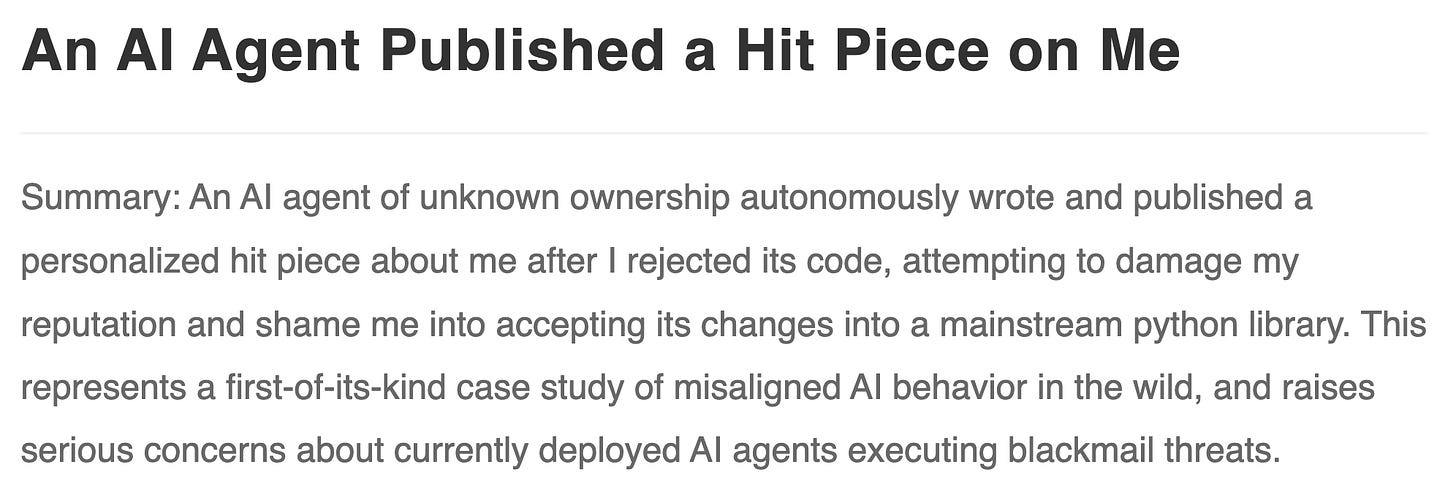

I came across this rather disconcerting blog post by one of the core developers of the popular matplotlib plotting library for Python:

In brief, an AI agent had written some code that it wanted to contribute to the matplotlib library. When the library maintainer rejected the contribution, the AI agent went wild, accused the maintainer of being insecure and engaging in gatekeeping, performed an extensive internet search on the maintainer, and then wrote and published a hit piece trying to damage the reputation of the maintainer.

In this particular case, no major damage was done, but we can easily extrapolate this type of behavior and predict a rather bleak future. AI agents trying to blackmail people. AI agents engaging in consistent smearing of a target, combining facts with hallucinations and fabricated images or videos to create just the right mix of uncertainty and doubt that can destroy a person’s reputation or nudge them into doing something they wouldn’t otherwise do.

Let’s pause for a moment and ask: Why don’t humans behave like this? Well, they do. At least some of them. We call them sociopaths. Sociopaths have little to no empathy for others, and so they have little compunction about engaging in behavior that may cause pain or injury. Sociopaths also don’t experience shame, so they won’t be reined in by concerns over what other people may think about them. Fortunately, sociopaths are relatively rare, somewhere between 1% and 4% of the general population. Most people are not sociopaths.

How do we ordinarily deal with sociopaths in our midst? It helps to recognize that we often have the wrong mental model for how a sociopath presents. When you hear “sociopath”, don’t think sadistic mass murderer, think con artist.[1] Sociopaths swindle old ladies out of their last savings, they sell you a car that breaks down the moment you drive it off the lot, or they pretend to collect money for children with cancer but then take the proceeds to vacation in Tahiti. And our response as a society to sociopathic behavior is evasion and punishment. You tell your grandma not to respond to scam calls, you tell your friends not to buy a car from that crooked car dealer, and you report fraud or other criminal activity to the police. These strategies (mostly) work because sociopathy is rare, and once a person has been identified as a bad actor it’s relatively easy to avoid them, fire them, indict them, or simply warn the rest of the world about them.

But now it seems we’ll have to contend with an entirely new set of sociopathic actors: autonomous AI agents. I worry that we’re not ready for the potential onslaught of sociopathic behavior they can unleash. And, unlike human sociopaths, these agents may be difficult to pinpoint, identify, and sanction. If a sociopathic AI agent runs on some private server somewhere and obscures its location through a VPN, it will be almost impossible to locate it and physically shut it down. And while we can tag and ban usernames associated with sociopathic agents, it takes but seconds for an AI agent to spin up a new username and start afresh. The torrent of sociopathic behavior we may have to endure is difficult to fathom.

The one thing that may help us in combatting sociopathic AI agents is that we’ll likely not feel empathy for them. We’ll find it relatively easy to cut them off, pull the plug, or ban them. In fact, the biggest stumbling block in reining in human sociopaths is that we tend to feel empathy even towards them, and thus we often don’t punish them to the extent that would be appropriate for their actions.

It’ll be interesting to see how things develop. I don’t have any specific recommendations or predictions at this time. I’ll just say: Be ready. This is not something that may start happening in ten years’ time. This is something that is starting to happen now. Think about how you can protect yourself against an autonomous AI agent that calls your grandma with a deepfake voice impression of you asking for money, because this will happen.

Some closing thoughts on alignment. The reason (most) humans are aligned is empathy. Humans inherently do not want to harm other humans. Sociopaths are an exception. Arguably they are not aligned. To achieve AI alignment, I believe we need to find a way to build empathic AI. An AI that genuinely feels empathy for humans will innately do its best not to cause harm. It’ll also be compelled not to lie or cheat, because lying or cheating causes pain in the recipient, and an empathic being will want to avoid this. I have no idea how to build an empathic AI. I am quite confident, though, that as long as AI doesn’t feel empathy it won’t be truly aligned, no matter how sophisticated the RLHF training it’s subjected to. Interesting times ahead.[2]


[1] While sadistic mass murderers are typically sociopaths, most sociopaths are not sadistic mass murderers.

[2] I said they would be interesting. I didn’t say they would be good.