Random seeds and brown M&Ms

Your first mistake was assuming people actually understand how random numbers work.

My recent post about random seeds generated extensive discussions about best practices in random number generation. This is great. The more people are aware of the unexpected pitfalls the better. However, I received some pushback I found rather surprising. More than one person, and mostly people with extensive training in statistics, strongly argued that the random seed is arbitrary, and therefore 42 is fine. If your results depend on the random seed, they said, you have a bigger problem.

Let’s dissect this statement carefully. “If the results depend on the random seed you have a bigger problem.” I agree. But here’s the issue. How do you know? If you always use the same random seed, you won’t realize your results depend on the random seed, because you’ll always get the same results.

A trained statistician might say, “That’s silly, why would anybody do this?” but that’s exactly my point. People who unquestioningly set their random seed to 42 may mess up their analyses in other ways. A random seed of 42 is the brown M&Ms of machine learning and computational modeling.[1] The intersection between people who always use random number generators appropriately and those who routinely set their random seed to 42 is extremely small.[2]

Let’s imagine this conversation between John, a student, and Martin, an experienced machine-learning expert.

“Hey Martin, I’ve run my model five times. I get an accuracy of 98% on the test data every time. My model performs great,” says John.

“That seems too good to be true,” Martin responds. “What’s your performance on the training data?”

“Oh, it’s only about 70%. The model seems to generalize really well.”

Now Martin is getting worried. “You ran the model multiple times, and you got 70% on the training data and 98% on the test data? Did you use the same training–test split each time by any chance?”

“Absolutely not,” John retorts. “I generated a new random training–test split each time, as described in the scikit-learn documentation, using their exact example code.”

Martin is increasingly confused. He hasn’t read the scikit-learn documentation in a while, and so has no idea what it says. He asks John to pull up the documentation.

John pulls out his laptop and says, “Here it is, the example code from scikit-learn:”

    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.33, random_state=42)

Martin looks at the example code and says, “But you did change the random state, right?”

“I did not,” says John, now starting to wonder whether he should feel embarrassed about having made a stupid mistake or proud about having thought things through really well. “I didn’t want to give the impression that I cherry-picked my analysis by choosing a specific random seed, so I stuck with the default provided in the documentation. It’s from the Hitchhiker’s Guide. Many people use it.”

If you think this dialog is completely unrealistic then I’m sorry, you need to spend less time with your computer and more time with people.[3] Random number generation is an obscure technical topic that most people don’t know much about. Even people who routinely do data analysis or machine learning are not necessarily well informed about how exactly random number generation works. That’s why I wrote my previous post in the first place.

Similarly, I gave my recommendation of not explicitly setting a seed at all because I know how people operate. Yes, this choice sacrifices some reproducibility, and doing something like generating a true random seed and writing it into a log file would be better, but all of this is additional mental overhead that for a good fraction of people will simply be too much. Anybody who has taught students knows that if you provide example code with a seed, some fraction of people will use your code as written and not change the seed. It doesn’t matter how often you say “change the seed.” If your code contains a seed, people will end up using that exact seed, every time.
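For readers who do want the more reproducible option mentioned above, here is a minimal sketch of drawing a fresh seed and recording it. The helper name `fresh_seed` and the 32-bit width are my assumptions, chosen so the value would also be usable as an integer `random_state`. The example logs to the console, and passing `filename=` to `logging.basicConfig` would send the same line to a log file instead.

```python
import logging
import random
import secrets

logging.basicConfig(level=logging.INFO, format="%(message)s")

def fresh_seed(bits=32):
    """Draw a seed from the operating system's entropy source."""
    return secrets.randbits(bits)

seed = fresh_seed()
# Record the seed so this exact run can be replayed later.
logging.info("random seed for this run: %d", seed)

rng = random.Random(seed)
first_run = [rng.random() for _ in range(3)]

# Replaying with the logged seed reproduces the same numbers.
replay_rng = random.Random(seed)
replay = [replay_rng.random() for _ in range(3)]
print(first_run == replay)
```

Every run gets a different seed, yet any individual run can still be reproduced from its log entry.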

There’s one more issue. In particular when you’re coding with scikit-learn, you need to set random seeds all over the place. Every single function that has random behavior takes its own separate random_state argument. So you can quickly face the situation where you need many random seeds. Want to do a train/test split? Please provide a random seed. Want to do a t-SNE? Please provide a random seed. Want to do a PCA? Please provide a random seed. Want to fit a random forest model? Please provide a random seed. You will quickly run into decision fatigue where you won’t have the energy to come up with new random seeds everywhere. You could set up an elaborate scheme where you have a master random number generator which you use to generate random seeds for each step of your analysis, but come on, nobody is going to do this. So I still think it is better to get into the habit of not setting a random seed at all and instead relying on the fresh, system-provided randomness the library uses by default.
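For the curious, the master-generator scheme can be sketched in a few lines of standard-library Python. The function `derive_seeds` and the step names are hypothetical, made up for this illustration. The idea is that only the one master seed needs to be chosen and logged, and each analysis step then receives its own derived seed.

```python
import random
import secrets

def derive_seeds(master_seed, step_names):
    """Use one master generator to draw a separate seed for each step."""
    master = random.Random(master_seed)
    return {name: master.randrange(2**32) for name in step_names}

# Fresh on every run; this single number is all you would need to log.
master_seed = secrets.randbits(32)

# Hypothetical step names for an analysis pipeline.
steps = ["split", "tsne", "pca", "forest"]
seeds = derive_seeds(master_seed, steps)

print("master seed:", master_seed)
for name, value in seeds.items():
    print(name, value)

# Each derived seed would then go to the matching call, for example
# train_test_split(X, y, test_size=0.33, random_state=seeds["split"])
```

This removes the decision fatigue, since no seed is ever picked by hand, but it is still extra machinery compared with simply leaving the seed unset.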

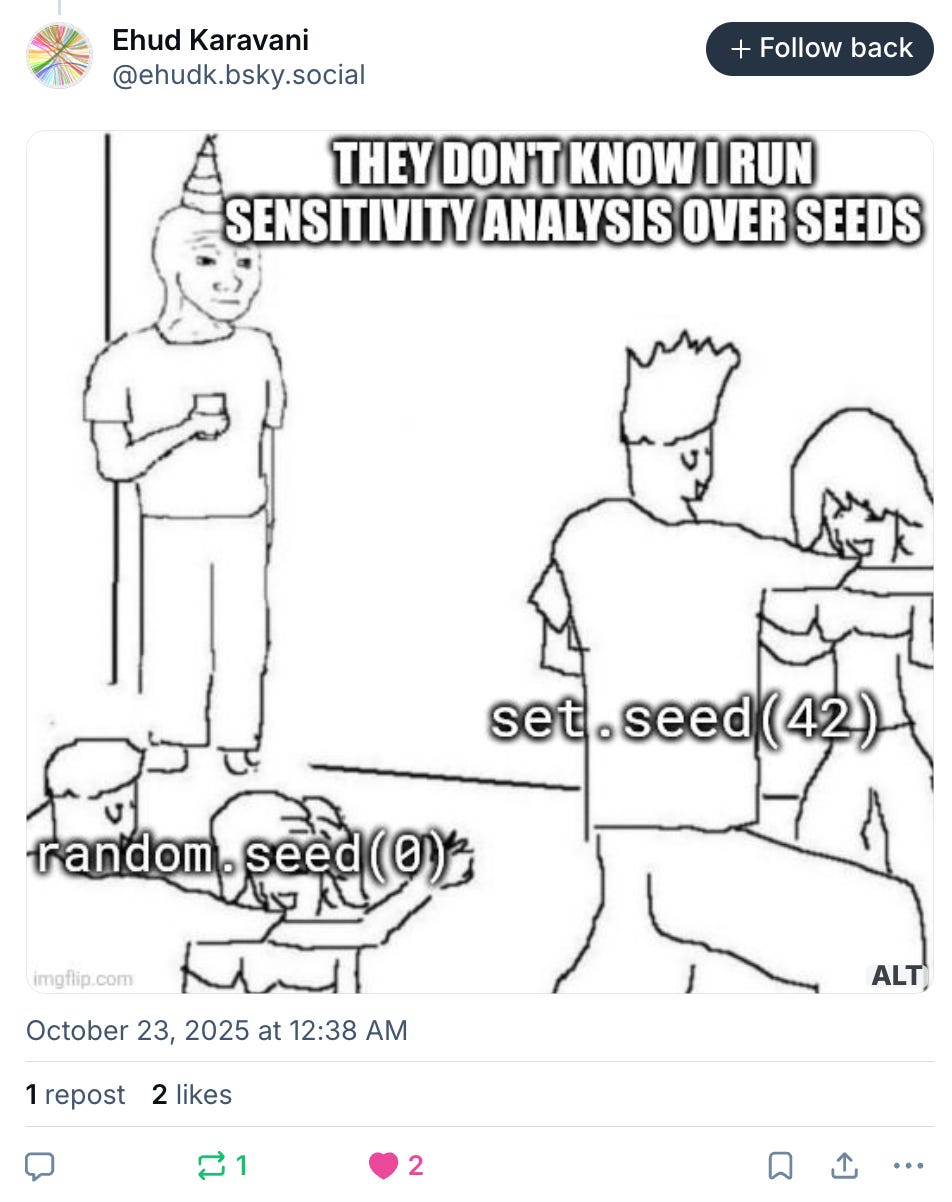

Let me end with this post on BlueSky by Ehud Karavani. It captures the right attitude. This is what you should be doing.

[1] If brown M&Ms don’t mean anything to you, read this (true) story about how the rock band Van Halen would demand no brown M&Ms in the backstage area. This demand was meant purely as a test to see whether the production company had actually read the entire contract and could be considered reliable.

[2] I’m not discounting that there are a handful of people who routinely use a seed of 42 when it won’t cause any issues and yet appropriately use a range of different seeds when it matters. They’re probably the people writing the scikit-learn documentation.

[3] And let me just emphasize that if you see yourself in John, I don’t think John is a bad student. He’s probably a very good student. He just doesn’t know much about how random number generation works. That’s fine. There are more things to know than any one person can ever absorb. Everybody has knowledge gaps somewhere.

Thanks again for the good math.

If you want to have serious fun, correct a redditor's bad arithmetic by informing them that 0.45% = 0.0045.

And then sit back while five or more people try to tell you that you're wrong.

Another good article. My only comment is that I never heard the Van Halen story but it reminded me of a distant memory. A rapper (I think Wiz Khalifa) came to town and I remember someone telling me he had a provision that he couldn't have any M&M's of a specific color, I think green not brown, and we laughed it off as pampering. Whoever it was, and whatever color, guess I shouldn't have been quick to judge!